Deploying DeepSeek Locally: Your Ultimate Hardware Guide

Deploying DeepSeek Locally: Your Ultimate Hardware Guide

Deploying the powerful DeepSeek language models locally opens up exciting possibilities for AI development, code generation, and natural language processing (NLP) tasks. However, to unlock DeepSeek's full potential, you need the right hardware. This comprehensive guide provides a detailed breakdown of the necessary components, optimization tips, and key considerations to ensure a smooth and performant local deployment. We'll cover everything from RAM and GPUs to storage and CPUs, helping you build the perfect hardware setup for local DeepSeek projects.

Why Local Deployment Matters for DeepSeek:

Before diving into the specifics, let's briefly discuss the benefits of deploying DeepSeek locally:

- Privacy & Data Security: Keep your data entirely under your control.

- Cost-Effectiveness: Avoid recurring cloud service fees, especially with high usage.

- Customization & Experimentation: Freely tailor the model and experiment with various parameters.

- Low Latency: Achieve faster response times compared to relying on cloud APIs.

1. RAM Requirements for DeepSeek: The Foundation of Performance

RAM (Random Access Memory) is arguably the most critical hardware component for running DeepSeek locally. It directly influences the model's ability to load, process, and generate text efficiently. Insufficient RAM will lead to slow performance, frequent crashes, and ultimately, make local deployment impractical. The RAM requirement will greatly affect the final performance.

- Minimum: For smaller DeepSeek models (e.g., DeepSeek-Coder 6.7B), a minimum of 64GB RAM is likely needed. This allows the server to load the AI model data.

- Recommended: To comfortably handle medium-sized models (e.g., DeepSeek-Coder 33B), 128GB RAM is highly recommended.

- Optimal: For deploying the largest DeepSeek models (e.g., DeepSeek-Coder 66B) and/or running multiple tasks simultaneously, 256GB RAM or more is the best choice. The AI server must use enough RAM.

- Consider the Ecosystem: Remember that the AI server's operating system, runtime environments (e.g., Python, PyTorch), and the inference process itself also consume RAM. Factor this into your capacity planning. The model and its associated environment work on RAM.

- Scalable solutions are available.

2. GPU Power for DeepSeek: Accelerating the Inference Process

While RAM provides the space, GPUs (Graphics Processing Units) provide the power to accelerate the inference process, significantly reducing the time it takes to generate text responses. GPUs are critical for running AI models.

- Minimum: A high-performance GPU is a must. Consider options like NVIDIA's RTX 3090 or equivalent.

- Recommended: Aim for NVIDIA's A100 or H100 series, or comparable professional GPUs, for the best performance. These GPU can help with code generation task.

- Optimal: Multi-GPU configurations are highly recommended for substantial performance gains, especially with larger DeepSeek models. Imagine configuring 2-4 GPUs, to help improve AI.

- GPU Memory is Key: Ensure your chosen GPU has sufficient VRAM (Video RAM). The GPU's memory is very important. The GPU's memory needs to hold model parameters and intermediate results.

- Consider the Workload: The nature of your intended workload will also influence GPU choice. For AI training or more complex NLP task, you will need a very high-performance GPU such as the NVIDIA H100.

3. Storage Solutions for DeepSeek: Speed and Capacity

Storage affects not only the model loading time but also the efficiency of data processing and temporary file management during operation. You'll want to use efficient storage on your AI server.

- Minimum: A fast SSD (Solid State Drive) with at least 1TB of storage is essential.

- Recommended: Opt for NVMe SSDs for significantly faster read and write speeds. This will dramatically speed up model loading and data handling.

- Capacity Considerations: The required storage capacity depends on the size of the DeepSeek model files, any associated datasets, and the need to store intermediate results.

- Think About Data: Storage is crucial to the overall system.

4. CPU Considerations for DeepSeek: The Supporting Role

While the GPU shoulders the primary computational burden, the CPU (Central Processing Unit) plays a crucial role in managing the overall inference process, loading the model, data pre-processing, and orchestration. The CPU supports the AI server and manages its operations.

- Multi-Core and High Clock Speed: Choose a CPU with multiple cores and a high clock speed to efficiently handle these supporting tasks.

- Intel Xeon or AMD EPYC: Consider CPU from the Intel Xeon or AMD EPYC series for their robust performance and enterprise-grade reliability.

- Match to Your GPU: Make sure your CPU doesn't bottleneck your GPU – a balanced configuration is essential.

5. Networking Needs for Local DeepSeek: Bandwidth Considerations

Although local deployment means you’re not relying on an internet connection for inference, network connectivity is important for several reasons:

- Data Transfer: If you are moving data sets or the model itself onto the AI server, network speed will affect loading times.

- Remote Access: You may wish to access your locally deployed DeepSeek server from a remote machine.

- Multi-Server Setups: Consider using faster networking if you are distributing the model on a scalable setup with multiple servers.

- High Bandwidth: Use a Gigabit Ethernet connection or better, with consideration to faster options like 10GbE depending on needs.

6. Optimizing Your Hardware for DeepSeek: Beyond the Basics

Once you have chosen your hardware, there are several additional steps you can take to optimize performance:

- Operating System: Choose a Linux-based operating system (e.g., Ubuntu, Debian) as it generally offers better performance and compatibility for AI workloads.

- CUDA & Drivers: Install the latest NVIDIA CUDA drivers and the appropriate CUDA toolkit to enable GPU acceleration.

- Deep Learning Framework: Select a suitable AI framework like PyTorch, which has great performance, and ensures compatibility with the GPUs.

- Hardware Monitoring: Use hardware monitoring tools to track resource usage (CPU, GPU, RAM, storage) and identify potential bottlenecks.

- Model Quantization: Explore model quantization techniques to reduce the memory footprint of the DeepSeek model, which can help improve performance for AI and reduce RAM requirements.

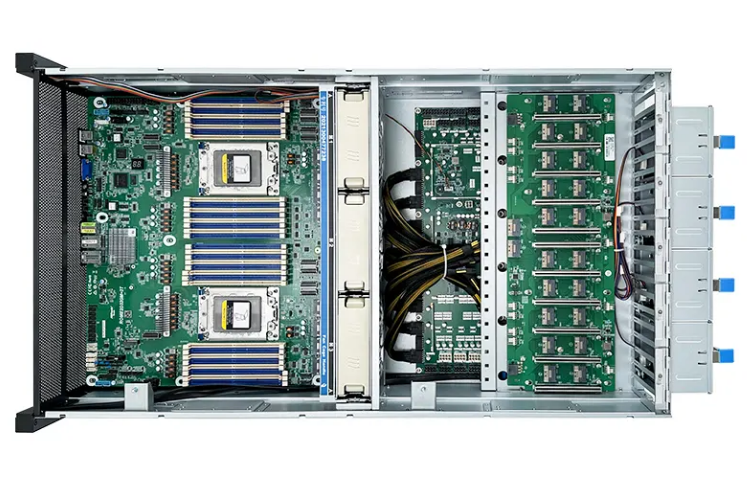

7. The AI Server Chassis: Physical Design and Cooling Solutions

The server chassis is often overlooked, but it is vital for managing the temperature and maintaining the health of your hardware. This involves the design of your system.

- Cooling: Sufficient cooling is critical, especially if you’re using multiple GPUs. Look for chassis with excellent airflow and consider water cooling solutions. Liquid cooled systems are usually the solution.

- Space: Your chassis needs to accommodate the selected components (multiple GPUs, storage devices, and so on). Consider a 4U server configuration for maximum flexibility.

- Power Supplies: Ensure the power supply is sufficient to handle the GPUs, CPUs, and other components.

- Modular Design: Modular designs can simplify maintenance and upgrade the system.

- Onechassis is the professional AI server chassis supplier, they can offer the best ai server chassis for you.

8. The Local DeepSeek Deployment Process: Step-by-Step Guide

Once you have the hardware in place, you can use this step-by-step guide:

- Install the Operating System: Install a Linux-based operating system on your server.

- Install GPU Drivers and CUDA: Follow the NVIDIA guide on setting up CUDA and compatible drivers.

- Install Deep Learning Framework: Install a deep learning framework, like PyTorch.

- Download the DeepSeek Model: Download the DeepSeek model files.

- Load the Model: Load the model and its associated packages into the environment you set up in the earlier stages.

- Configure for Inference: Set up the inference environment.

- Test Your Deployment: Ensure that your installation is ready to go.

9. Troubleshooting Common Deployment Issues

Even with the right hardware, you might encounter some challenges. Here are some common issues and how to address them:

- Out of Memory Errors: Increase the RAM or try model quantization.

- Slow Inference Times: Verify the GPU drivers are correctly installed.

- Hardware Compatibility Issues: Review compatibility issues for your GPU and other components.

- Overheating: Invest in better cooling solutions and monitor temperatures.

10. Building Your AI Infrastructure: Long-Term Considerations

As your DeepSeek projects grow, consider these long-term factors:

- Scalability: Plan for the potential need to expand your hardware resources as your usage increases. You might need to use a scalable AI server.

- Maintenance: Design for easy access and serviceability of components.

- Power Consumption: Monitor power usage and explore energy-efficient hardware options.

- Budget: Balance the cost of hardware with the long-term value it provides.

11. Where to Purchase Hardware for DeepSeek

Choose reliable vendors for your hardware purchases. Consider:

- GPU: Check with NVIDIA, your preferred retailer.

- CPU and RAM: Shop with Intel, AMD, and other manufacturers.

- Storage: Shop with Western Digital, Samsung, and other manufacturers.

12. The Future of AI and Local Deployment:

Local deployment of AI models like DeepSeek represents a crucial trend in the future of AI. As models become more powerful, and AI becomes more integrated into our daily lives, the need for privacy, control, and low-latency access to these technologies will continue to drive innovation. This means that having your own AI infrastructure has value. The use of AI servers will grow.

Conclusion: Powering Your DeepSeek Dreams

Choosing the right hardware is vital for successfully deploying DeepSeek locally and accelerate your AI journey. By carefully considering the RAM, GPU, storage, and CPU requirements, and implementing best practices for hardware and software configuration, you can unlock the power of DeepSeek and create amazing AI-powered applications and solutions. You can use AI for code generation and NLP.

Key Takeaways:

- RAM is Paramount: Prioritize sufficient RAM for the chosen DeepSeek model.

- GPU Acceleration is Essential: Select powerful GPUs to speed up inference.

- Fast Storage Matters: Use fast NVMe SSDs for quick loading.

- Choose a High-Performance CPU: Choose the CPU to support AI.

- Plan for Cooling: Invest in robust cooling to prevent overheating.

- Scale Smartly: Consider future hardware expansion needs.

- Build a Stable System: Choose a reliable server chassis and power supply.

- Follow a Structured Deployment: Then your deployment is going to be perfect.

- Call us for assistance.

By following this guide, you'll be well-equipped to build your own AI server and deploy DeepSeek locally, opening up a new world of possibilities. Remember to call us if you need further assistance!

When AI Becomes A Threat, Infrastructure Becomes the Front Line

When AI Becomes A Threat, Infrastructure Becomes the Front Line

Storage Density Choices in the Same Server Chassis

Storage Density Choices in the Same Server Chassis

Welcoming 2026: Building the Next Year of AI Infrastructure Together

Welcoming 2026: Building the Next Year of AI Infrastructure Together

How We Design Thermal Paths: Airflow, Static Pressure & Fan Zones

How We Design Thermal Paths: Airflow, Static Pressure & Fan Zones